by Marie Ferri, Jonas Simonin, Louis PetitJean

The opportunities that the recent rise of Generative AI has opened for both individuals and businesses hardly need restating. Its unprecedented pace of adoption compared to other technologies speaks for itself. As with any emerging technology, and particularly given how quickly it has spread, this wave of opportunities also raises concerns around privacy and security.

This article aims to explore the various ways GenAI, LLMs in particular, and cybersecurity interact, from novel security threats to enhanced cyber resilience.

The consumer-facing nature of models has accelerated the spread of Generative AI across companies and deepened their reliance on it. As GenAI becomes more integrated into IT systems and employees’ daily tasks, new vulnerable surface areas emerge along with new security and privacy challenges. Securing models’ usage and promoting a safe internal implementation are key priorities to drive stronger adoption and more controlled usage of GenAI in the corporate world.

GenAI can also be leveraged by cyber offenders to scale and enhance their malicious operations. The technology opens the door to more sophisticated attacks, like social engineering or vulnerability discovery at a much larger scale than ever before.

Fortunately, security practitioners can also use GenAI to strengthen cyber resilience. With its advanced analysis and automation capabilities, GenAI can help defenders address some of the cyber industry's most pressing pain points, such as the vast cybersecurity talent gap, with more than 3M jobs unfilled.

Securing the deployment and usage of LLMs within organizations

The enticing opportunities presented by GenAI have led many organizations to explore its potential applications. However, transitioning from experimental stages to full-scale production presents multiple challenges. These include managing extensive data requirements, addressing inference costs and latency issues, and crucially, ensuring the security and privacy of both the models and data.

Security and privacy are related yet distinctly different challenges. Often, security entails building an impenetrable wall to safeguard data. However, privacy requires a more nuanced approach, allowing certain data to be accessible under controlled conditions. This is crucial as businesses do not store data merely to lock it away indefinitely. This balance between security and privacy has been a cornerstone of data regulation for the last twenty years, particularly with the advent of GDPR in the EU. However, as we move from traditional databases—where data can be neatly arranged and easily deleted—to AI models, the complexity increases. With deep learning and neural networks that power LLMs, the straightforward “delete” functionality is absent. In other words, once customer data, proprietary information, or employee details are integrated into an LLM through training, inference, or other processes, it becomes almost impossible to remove that data completely.

Security forms a crucial pillar of reliability and the safe integration of AI in business processes. Given the complexities of AI models, embedding security, explainability, and transparency from the design phase through deployment is essential.

LLM Vulnerabilities

LLMs not only encompass the general risks associated with AI models but also carry specific vulnerabilities unique to GenAI and the direct interactions that users may have with them. Studies from institutions like MITRE and OWASP have highlighted these vulnerabilities, underscoring their significant impact on AI systems.

- Manipulation attacks target the operational behavior of AI systems with malicious requests that could distort responses, initiate dangerous actions, or cause service disruptions. For example, an attacker might overload the model with excessive requests, leading to a denial of service that compromises the model’s availability and incurs significant resource costs.

- Infection attacks pose threats during the AI’s training phase, where alterations in training data or the insertion of backdoors can severely compromise the system. One common tactic performed by offenders is training data poisoning, where attackers deliberately manipulate data to embed vulnerabilities and biases within the model.

- Exfiltration attacks pose a significant concern for companies, as they involve extracting valuable information from AI systems during production. This includes the model’s training data, sensitive internal parameters, and other proprietary information, compromising privacy and intellectual property rights.
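To make the request-flooding example above concrete, one common mitigation is per-client rate limiting in front of the model endpoint. The sketch below is a minimal token bucket; the `TokenBucket` class, its parameters, and the numbers used are our own illustrative assumptions, not a description of any specific vendor's product.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    """Hypothetical per-client budget: `capacity` requests, refilled over time."""
    capacity: float
    refill_per_sec: float
    tokens: float = 0.0
    last_seen: float = 0.0

    def __post_init__(self) -> None:
        # Start with a full bucket so a new client is not throttled immediately.
        self.tokens = self.capacity

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Return True if a request arriving at time `now` (seconds) may proceed."""
        # Refill in proportion to elapsed time, capped at capacity.
        elapsed = max(0.0, now - self.last_seen)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last_seen = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# A burst of five requests at t=0 against a budget of three.
bucket = TokenBucket(capacity=3, refill_per_sec=1.0)
burst = [bucket.allow(now=0.0) for _ in range(5)]
```

In practice, the `cost` argument could be tied to prompt length or expected token usage rather than a flat value per request, since LLM inference cost varies widely between requests.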

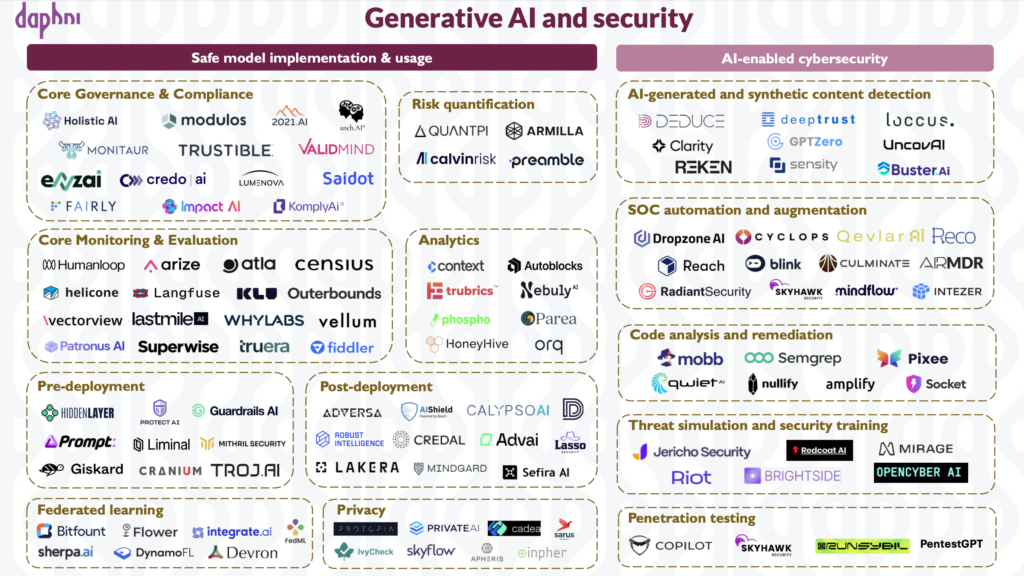

In response to these privacy and security challenges, several companies are emerging to help secure model implementation and promote safe consumption.

Governance & Compliance

Governance and compliance are critical pillars that ensure AI systems operate within the correct legal and ethical frameworks. These systems often include centralized inventories or registries, allowing companies to effectively oversee all AI and ML projects. Such solutions not only categorize projects by potential revenue, impact, and risk but also support strategic decision-making, making it easier for businesses to align their AI practices with both internal standards and external regulations.

Companies like Holistic AI offer a comprehensive solution for conducting AI audits that evaluate systems for bias, validate the development and deployment of models, and facilitate compliance with AI regulations and frameworks such as the EU AI Act and the NIST AI RMF.

Risk quantification

Solutions such as Calvin Risk focus on evaluating technical, ethical, and regulatory risks through a modular approach tailored for AI analytics and validation teams. As traditional risk model validation evolves towards AI-driven methodologies, the company is developing expert validation co-pilots to make the validation process both faster and more cost-effective.

Observability

Observability encompasses a suite of tools designed to monitor, evaluate, and analyze the performance and security of models throughout their operational lifecycle.

Monitoring systems like Arize and TruEra track model performance across various metrics to alert teams to performance declines and to facilitate root cause analysis that pinpoints and rectifies underlying issues. Evaluation tools like Patronus assess and score model performance, while running tests at scale to find edge cases where models might fail.
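As a rough illustration of what "alerting on performance declines" can mean, the sketch below compares a rolling mean of recent evaluation scores to a fixed baseline. The `DeclineMonitor` class, the window size, and the threshold are hypothetical choices made for this example and do not reflect how any of the tools named above work internally.

```python
from collections import deque
from statistics import mean


class DeclineMonitor:
    """Hypothetical monitor: flags when recent scores fall well below a baseline."""

    def __init__(self, baseline: float, window: int = 5, max_drop: float = 0.05):
        self.baseline = baseline
        self.max_drop = max_drop
        self.scores = deque(maxlen=window)  # keeps only the most recent scores

    def record(self, score: float) -> bool:
        """Add a score; return True when the rolling mean drops too far."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data yet to judge a trend
        return self.baseline - mean(self.scores) > self.max_drop


monitor = DeclineMonitor(baseline=0.90, window=3, max_drop=0.05)
alerts = [monitor.record(s) for s in [0.91, 0.90, 0.89, 0.80, 0.78]]
```

Using a rolling window rather than single readings keeps one noisy evaluation from triggering an alert, at the cost of reacting a few samples later.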

Analytics solutions allow AI and product teams to automatically monitor user interactions with models. They facilitate the collection, analysis, and management of user prompts and feedback, enabling AI teams to optimize model performance based on real-world usage data.

Deployment security

It is critical for companies to undertake pre-deployment assessments to ensure LLMs’ secure operations. This stage aims to minimize potential vulnerabilities and prioritize data sanitization.

Companies like HiddenLayer and Cranium focus on monitoring AI environments and infrastructures to detect and respond to adversarial attacks. Other startups like Protect AI focus on embedding sanitization mechanisms directly into models to shield them against data breaches and prompt injection threats.

Once models are deployed, companies need to continuously monitor these systems and address security incidents.

Techniques like red teaming are employed to identify and mitigate potential risks such as prompt injections, data leaks, and biased outputs. Tools like Lakera Red stress-test AI systems against various security threats to monitor and rectify issues related to data privacy and compliance.

AI firewalls like Robust Intelligence allow companies to monitor inputs and outputs and avoid data leakage, such as preventing employees from feeding a model with confidential data.
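The input-side check described above can be pictured as a screening step that runs before a prompt ever reaches the model. The sketch below is a deliberately simple, regex-based version; the `screen_prompt` function, the two patterns, and the `sk-` key format are assumptions made for illustration, and commercial AI firewalls are likely to rely on far richer detection than this.

```python
import re

# Illustrative patterns only; real coverage would need to be much broader.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # Made-up secret format used purely for this example.
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
}


def screen_prompt(prompt: str) -> tuple[str, list[str]]:
    """Redact matches of each pattern and report which categories were found."""
    findings: list[str] = []
    redacted = prompt
    for label, pattern in PATTERNS.items():
        if pattern.search(redacted):
            findings.append(label)
            redacted = pattern.sub(f"[{label}_REDACTED]", redacted)
    return redacted, findings


safe_prompt, found = screen_prompt(
    "Email jane.doe@example.com the key sk-abcdefghijklmnop1234"
)
```

A policy layer could then decide, based on `found`, whether to forward the redacted prompt, block the request, or simply log the event.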

Synthetic data and Federated learning

To meet the need for training models on extensive datasets, including company-specific and proprietary data, while ensuring data security, federated learning is a viable solution. Startups like DynamoFL and Flower help companies keep data local, significantly reducing the need for central data exchange. Moreover, startups that generate synthetic data enable companies to preserve privacy and enhance limited or biased datasets by creating data that mimics real-world conditions, thus providing richer training environments without containing sensitive information.
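To show why data can stay local in federated learning, the sketch below implements federated averaging, one widely used aggregation scheme: each participant trains on its own data and shares only model weights plus a sample count, and a coordinator combines them. We do not know which aggregation methods the startups above use; the `federated_average` function and the toy numbers are our own illustrative assumptions.

```python
def federated_average(client_updates):
    """Combine client weights into one model, weighted by local sample count.

    client_updates: list of (weights, n_samples) pairs, where `weights` is a
    list of floats of equal length for every client. Raw data is never passed.
    """
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("at least one client must contribute samples")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Two hypothetical sites: one with 1 sample, one with 3 samples.
global_weights = federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)])
```

Weighting by sample count means a site with more data has proportionally more influence on the shared model, without its records ever leaving its own infrastructure.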

Companies and security teams will increasingly require robust tools to assess and continuously monitor AI systems. The non-deterministic nature of LLMs will undoubtedly drive the development of specialized tools tailored for LLM testing, evaluation, observability, and security.

Amplifying risks: how generative AI escalates cyber threats

The emergence of GenAI and LLMs has significantly escalated the broader cyber threat landscape, beyond risks linked to the implementation and use of models by companies and individuals. Cybercriminals leverage these models’ capabilities to run more sophisticated, larger-scale operations. By providing readily available tools and techniques that require less specialized knowledge and expertise, generative AI lowers the barrier to entry for cyber offense. Below, we highlight some of the pressing threats powered by generative AI.

Malicious code generation

Specialized tools like FraudGPT give anyone rapid access to malicious code ready to be deployed, including highly complex malware such as polymorphic malware crafted to evade security measures. To counter these increasingly sophisticated attacks, solutions like Upsight Security use deep analysis of code to predict the evolution of malware and attacks. Additionally, hackers can exploit AI hallucinations: AI chatbots might mistakenly mention software packages that don’t actually exist. Hackers can then create harmful open-source packages with these names. When developers look for these suggested packages and use them, thinking they are legitimate, they could introduce security risks into their systems.
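One simple guard against the hallucinated-package scenario above is to vet any dependency an assistant suggests against a list the organization has already approved, before anything is installed. The sketch below assumes such an internal allowlist exists; the `vet_dependencies` function and the package name `fast-json-utilz` are hypothetical and chosen only for illustration.

```python
def vet_dependencies(suggested, approved):
    """Split suggested package names into approved and unknown lists.

    Names are compared case-insensitively, treating '_' and '-' as equivalent,
    so trivial spelling variants of an approved name are not flagged.
    """
    def normalize(name: str) -> str:
        return name.strip().lower().replace("_", "-")

    approved_normalized = {normalize(name) for name in approved}
    ok, unknown = [], []
    for name in suggested:
        (ok if normalize(name) in approved_normalized else unknown).append(name)
    return ok, unknown


ok, needs_review = vet_dependencies(
    ["requests", "fast-json-utilz"],  # second name is a made-up example
    {"requests", "numpy"},
)
```

Anything landing in `needs_review` would warrant a manual check of the package's origin and history before a developer adds it to a project.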

Crafting and scaling sophisticated phishing emails

Generative AI tools enhance hackers’ ability to exploit employees’ vulnerabilities by enabling the production of more compelling phishing emails at a larger scale. SlashNext’s State of Phishing report revealed that ChatGPT’s launch led to a 12x increase in malicious phishing emails. LLMs can understand employee-specific contexts and adjust the discourse and tone of an email accordingly. Their output also lacks the typical signals of phishing emails, such as poor sentence quality and typos. This makes it increasingly challenging for individuals to differentiate between fraudulent and genuine content. Even though ChatGPT can no longer generate deceptive phishing text, some dedicated tools like WormGPT continue to provide such capabilities. To help counter such threats, startups focusing on detecting AI-generated content, such as Uncov AI and GPTZero, are emerging, while others, like Brightside AI, offer awareness and training campaigns through personalized phishing simulations.

Synthetic content generation powering stronger identity fraud techniques and disinformation

Advancements in AI algorithms, increased computing power, and extensive data availability have made deepfakes more realistic, to the point where they are hard to distinguish from real content, and have significantly reduced generation costs. For instance, the computing cost of voice cloning has dropped from thousands of dollars to just a few, further lowering accessibility barriers.

The potential use cases for hackers range from massive disinformation on social networks to any fraud or social engineering manipulation powered by fake image, video, or even voice content. The generation of false identities creates new challenges in identity verification systems, opening the door to more fraud.

Companies such as Sensity detect deepfake injection in KYC and fraudulent document use, while Clarity AI aims to counter social engineering manipulation by alerting participants in real time to the presence of deepfake content in meetings. Other players focus on a specific mode of generation, like Loccus AI, which offers voice-based identity and authenticity verification solutions for use cases such as preventing call center fraud.

The detection of threats powered by GenAI is deeply embedded in the ongoing arms race between cyber offenders and defenders, necessitating innovative solutions to keep pace with the rapid development of generative capabilities.

Generative AI for greater cyber resilience

AI advancements also represent an opportunity for the defensive side, namely to work faster and to be more productive. LLMs’ capacity to process large volumes of unstructured text, along with their contextual understanding and adaptive learning abilities, offers new opportunities for security teams. These models are valuable in tasks that require analyzing large volumes of information, summarizing, and generating content. Below, we highlight some of the categories where we believe LLMs could have an important impact.

Security incumbents such as Microsoft and CrowdStrike, with its AI security analyst Charlotte AI, are starting to incorporate LLMs to complement their offerings. Startups leveraging generative AI for security are also emerging across various use cases with an AI-first approach, and could potentially move the needle through AI-native, more agile, user-centric workflows.

SOC automation and augmentation

A well-known challenge in the cyber industry is the flood of security alerts that security teams need to deal with, a trend that is getting worse with expanded IT ecosystems, the multitude of security tools employed, and now the growing capabilities of cyber offenders. Alert investigation is a painful and manual process for security analysts. They are often overloaded with false positives, resulting in alert fatigue and unhandled alerts. A Cisco study found that 44% of alerts go uninvestigated, leaving substantial room for missed breaches.

AI security agents automate the investigation process to help security teams expand and speed up their threat analysis coverage. They can be particularly useful for reviewing lower-priority alerts, giving analysts more time to focus on more critical security activities. Unlike traditional automation tools that rely on predefined playbooks and manual workflows, LLMs can automate reasoning and follow sequential steps across a multitude of use cases. Models can also help narrow the security knowledge gap by suggesting best recovery practices to cyber analysts, learning from past incidents, and automating incident response reports. Dropzone AI has developed an AI SOC analyst that replicates the techniques of cyber analysts to perform end-to-end alert investigations, prioritizing high-value alerts with in-depth reports for security professionals to evaluate.

Code analysis and vulnerability remediation

LLMs are not only a powerful tool for writing malicious code; they can also serve beneficial purposes in defense. For instance, security teams can use LLMs to reverse-engineer malware, gain a better understanding of its purpose, and adapt their strategies. LLMs can also be used in developers’ environments to improve coding practices through continuous code evaluation, vulnerability identification, and fix suggestions. They strengthen static scanning processes by adding a contextual understanding of dependencies and integrating more seamlessly within developers’ environments, which promotes early detection in the development process. Product security copilots such as Mobb provide an automatic vulnerability fixer directly within developers’ workflows.

Security awareness training

With human error remaining the primary cause of most cyber attacks, continuous, employee-centric awareness strategies and security training are paramount. GenAI unlocks the potential to create truly personalized security training programs for security professionals and employees. The technology can be used to create more realistic scenarios, interact with the user, and adapt the training content based on individual roles and behaviors. AI security assistants can guide employees in their daily tasks and provide feedback based on user interactions. OpenCyberAI provides a virtual AI mentor that trains professionals through a dynamic and adaptive learning environment. Mirage Security offers next-gen security training through vishing simulations, leveraging deepfake voice clones and a mapping of the employee’s publicly available information for custom attack simulation.

Penetration testing

Just like offenders use LLMs to scale and enhance their creativity in the generation of malware, security teams can leverage LLMs to augment red teaming operations. GenAI can enhance pentesters’ work by intensifying infrastructure scanning, searching and incorporating the latest offensive techniques, identifying vulnerabilities, and generating automated reports. Increased automation represents a considerable opportunity for service providers to enhance the economics and scale of their operations.

As the threat landscape grows more complex, we believe AI and LLMs will be increasingly integrated within cyber tools and will empower security teams on further use cases. New leaders will emerge to facilitate the safe and scalable deployment of models, while also building greater cyber resilience to combat a stronger threat landscape.

Daphni is actively looking at the AI security space. If you are working on a project related to securing the implementation and usage stack, synthetic content identification, or leveraging GenAI to enhance security practices, we would love to hear from you! Apply here